‘Bossware is coming for almost every worker’: the software you might not realize is watching you

Computer monitoring software is helping companies spy on their employees to measure their productivity – often without their consent

"When the job of a young east coast-based analyst – we’ll call him James – went remote with the pandemic, he didn’t envisage any problems. The company, a large US retailer for which he has been a salaried employee for more than half a decade, provided him with a laptop, and his home became his new office. He was part of a team dealing with supply chain issues and the job was a busy one, but he had never been reprimanded for not working hard enough.

So it was a shock when, one day late last year, his team was hauled into an online meeting to be told there were gaps in its work: specifically, periods when people – including James himself, he was later informed – weren’t inputting information into the company’s database.

The number and array of tools now on offer to continuously monitor employees’ digital activity and provide feedback to managers is remarkable. Tracking technology can also log keystrokes, take screenshots, record mouse movements, activate webcams and microphones, or periodically snap pictures without employees knowing. And a growing subset incorporates artificial intelligence (AI) and complex algorithms to make sense of the data being collected.

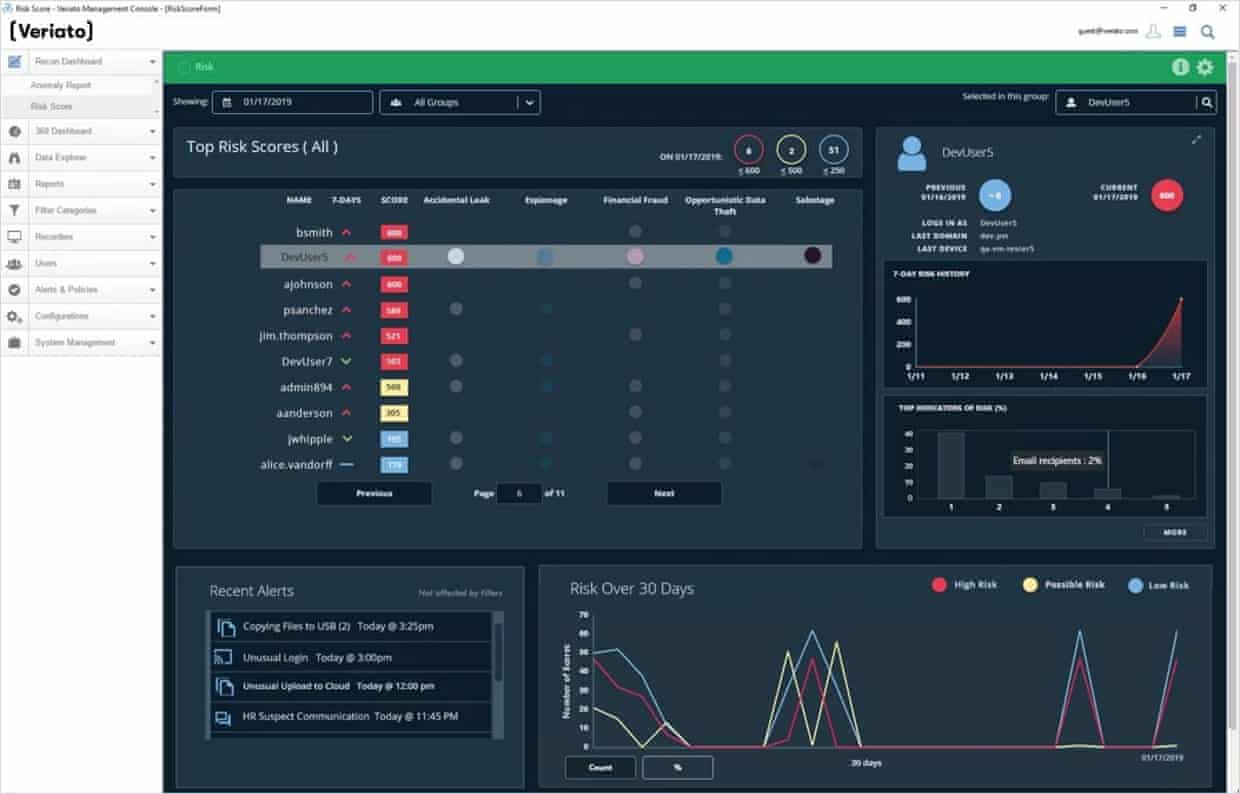

One AI monitoring technology, Veriato, gives workers a daily “risk score” which indicates the likelihood they pose a security threat to their employer. This could be because they may accidentally leak something, or because they intend to steal data or intellectual property.

The score is made up of many components, but one of them is what an AI finds when it examines the text of a worker’s emails and chats to purportedly determine their sentiment, or changes in it that can point towards disgruntlement. Employers can then subject those workers to closer examination.

“This is really about protecting consumers and investors as well as employees from making accidental mistakes,” says Elizabeth Harz, Veriato’s CEO.

Another company making use of AI, RemoteDesk, has a product meant for remote workers whose jobs require a secure environment because, for example, they handle credit card details or health information. It monitors workers through their webcams with real-time facial recognition and object detection technology to ensure that no one else looks at their screen and that no recording device, like a phone, comes into view. It can even trigger alerts if a worker eats or drinks on the job, if the company prohibits it.

RemoteDesk’s own description of its technology for “work-from-home obedience” caused consternation on Twitter last year. (That language didn’t capture the company’s intention and has been changed, its CEO, Rajinish Kumar, told the Guardian.)

But tools that claim to assess a worker’s productivity seem poised to become the most ubiquitous.

In late 2020, Microsoft rolled out a new product it called Productivity Score, which rated employee activity across its suite of apps, including how often workers attended video meetings and sent emails. A widespread backlash ensued, and Microsoft apologised and revamped the product so workers couldn’t be identified. But some smaller, younger companies are happily pushing the envelope.

Prodoscore, founded in 2016, is one. Its software is being used to monitor about 5,000 workers at various companies. Each employee gets a daily “productivity score” out of 100 which is sent to a team’s manager and the worker, who will also see their ranking among their peers. The score is calculated by a proprietary algorithm that weighs and aggregates the volume of a worker’s input across all the company’s business applications – email, phones, messaging apps, databases.
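Prodoscore’s algorithm is proprietary, and the article says only that it weighs and aggregates the volume of a worker’s input across business applications. As a purely hypothetical illustration of that general idea, the Python sketch below turns daily activity counts into a score out of 100 and ranks a team by it. The application names, weights, daily targets and function names are all invented for this example and are not Prodoscore’s.

```python
# HYPOTHETICAL weights per application; the real Prodoscore weights are not public.
WEIGHTS = {"email": 0.3, "phone": 0.3, "messaging": 0.2, "database": 0.2}

# HYPOTHETICAL daily volume that earns "full marks" for each application.
TARGETS = {"email": 40, "phone": 20, "messaging": 60, "database": 200}


def activity_score(counts: dict[str, int]) -> float:
    """Return a 0-100 score computed only from daily activity counts.

    Each application's volume is divided by its target and capped at 1.0,
    so activity beyond the target earns nothing extra; the capped ratios
    are then combined using the weights above.
    """
    total = 0.0
    for app, weight in WEIGHTS.items():
        ratio = min(counts.get(app, 0) / TARGETS[app], 1.0)
        total += weight * ratio
    return round(100 * total, 1)


def rank(team: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Score every worker and sort the team from highest to lowest score."""
    scored = [(name, activity_score(counts)) for name, counts in team.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


team = {
    "analyst_a": {"email": 40, "phone": 20, "messaging": 60, "database": 200},
    "analyst_b": {"email": 20, "phone": 10, "messaging": 30, "database": 50},
}
print(rank(team))  # [('analyst_a', 100.0), ('analyst_b', 45.0)]
```

Because the only inputs are counts, anything a worker does that produces no tracked activity contributes nothing to the score, and 400 emails score no better than 40 once the cap is reached. This is exactly the proxy problem that critics of such tools point to.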

Only about half of Prodoscore’s customers tell their employees they’re being monitored using the software (the same is true for Veriato). The tool is “employee friendly”, maintains CEO Sam Naficy, as it gives employees a clear way of demonstrating they are actually working at home. “[Just] keep your Prodoscore north of 70,” says Naficy. And because it is only scoring a worker based on their activity, it doesn’t come with the same gender, racial or other biases that human managers might, the company argues.

Prodoscore doesn’t suggest that businesses make consequential decisions about workers – for example on bonuses, promotions or firing – based on its scores, though “at the end of the day, it’s their discretion”, says Naficy. Rather, it is intended as a “complementary measurement” to a worker’s actual outputs, which can help businesses see how people are spending their time or rein in overworking.

Naficy lists legal and tech firms among its customers, but those approached by the Guardian declined to speak about what they do with the product. One, the major US newspaper publisher Gannett, responded that the product is used only by a small sales division of about 20 people.

A video surveillance company named DTiQ is quoted on Prodoscore’s website as saying that declining scores accurately predicted which employees would leave.

Prodoscore plans soon to launch a separate “happiness/wellbeing index”, which will mine a team’s chats and other communications in an attempt to discover how workers are feeling. It would, for example, be able to forewarn of an unhappy employee who may need a break, Naficy claims.

But what do workers themselves think about being surveilled like this?

James and the rest of his team at the US retailer learned that, unbeknownst to them, the company had been monitoring their keystrokes into the database.

In the moment he was being rebuked, James realized that some of the gaps must actually have been breaks: employees needed to eat. Later, he reflected hard on what had happened. While having his keystrokes tracked surreptitiously was certainly disquieting, it wasn’t what really smarted. Rather, what was “infuriating”, “soul crushing” and a “kick in the teeth” was that the higher-ups had failed to grasp that inputting data was only a small part of his job, and was therefore a bad measure of his performance. Communicating with vendors and couriers actually consumed most of his time.

“It was the lack of human oversight,” he says. “It was ‘your numbers are not matching what we want, despite the fact that you have proven your performance is good’… They looked at the individual analysts almost as if we were robots.”

To critics, this is indeed a dismaying landscape. “A lot of these technologies are largely untested,” says Lisa Kresge, a research and policy associate at the University of California, Berkeley Labor Center and co-author of the recent report Data and Algorithms at Work.

Productivity scores give the impression that they are objective and impartial and can be trusted because they are technologically derived – but are they? Many use activity as a proxy for productivity, but more emails or phone calls don’t necessarily translate to being more productive or performing better. And how the proprietary systems arrive at their scores is often as unclear to managers as it is to workers, says Kresge.

Moreover, systems that automatically classify a worker’s time into “idle” and “productive” are making value judgments about what is and isn’t productive, notes Merve Hickok, research director at the Center for AI and Digital Policy and founder of AIethicist.org. A worker who takes time to train or coach a colleague might be classified as unproductive because there is less traffic originating from their computer, she says. And productivity scores that force workers to compete can lead to them trying to game the system rather than actually doing productive work.
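To make Hickok’s point concrete, here is a minimal, hypothetical sketch in Python of an activity-based idle/productive classifier. It assumes the tool counts keyboard and mouse events per minute and labels any minute below an arbitrary threshold as idle. Real products may work quite differently, and the threshold and function names are invented for illustration.

```python
# HYPOTHETICAL threshold: minutes with fewer input events than this are "idle".
IDLE_THRESHOLD = 5


def classify_minutes(events_per_minute: list[int],
                     threshold: int = IDLE_THRESHOLD) -> list[str]:
    """Label each minute "productive" or "idle" purely from input-event volume.

    Nothing about the content of the work is considered: a minute spent
    explaining something to a colleague looks the same as a minute away
    from the desk.
    """
    return ["productive" if n >= threshold else "idle" for n in events_per_minute]


def idle_share(labels: list[str]) -> float:
    """Fraction of minutes labelled idle (0.0 for an empty session)."""
    if not labels:
        return 0.0
    return labels.count("idle") / len(labels)


typing = [30, 42, 25]      # three minutes of heavy typing
coaching = [0, 2, 1, 0]    # four minutes talking a colleague through a problem
labels = classify_minutes(typing + coaching)
print(labels)
print(round(idle_share(labels), 3))  # 0.571
```

Fed three minutes of typing followed by four minutes of coaching, the classifier marks every coaching minute as idle, so about 57% of the session is reported as unproductive even though the worker was busy throughout.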

AI models, often trained on databases of previous subjects’ behaviour, can also be inaccurate and bake in bias. Problems with gender and racial bias have been well documented in facial recognition technology. And there are privacy issues. Remote monitoring products that involve a webcam can be particularly problematic: they could reveal clues that a worker is pregnant (a crib in the background), is of a certain sexual orientation or lives with an extended family. “It gives employers a different level of information than they would have otherwise,” says Hickok.

There is also a psychological toll. Being monitored lowers your sense of autonomy, explains Nathanael Fast, an associate professor of management at the University of Southern California who co-directs its Psychology of Technology Institute. And that can increase stress and anxiety. Research on workers in the call centre industry – which has been a pioneer of electronic monitoring – highlights the direct relationship between extensive monitoring and stress.

Computer programmer and remote work advocate David Heinemeier Hansson has been waging a one-company campaign against the vendors of the technology. Early in the pandemic he announced that the company he co-founded, Basecamp, which provides project management software for remote working, would ban vendors of the technology from integrating with it.

The companies tried to push back, says Hansson – “very few of them see themselves as purveyors of surveillance technology” – but Basecamp couldn’t be complicit in supporting technology that resulted in workers being subjected to such “inhuman treatment”, he says. Hansson isn’t naive enough to think his stance is going to change things. Even if other companies followed Basecamp’s lead, it wouldn’t be enough to quench the market.

What is really needed, argue Hansson and other critics, is better laws that regulate how employers can use algorithms and that protect workers’ mental health.

In the US, except in a few states that have introduced legislation, employers aren’t even required to specifically disclose monitoring to workers. (The situation is better in the UK and Europe, where general rights around data protection and privacy exist, but the system suffers from a lack of enforcement.)

Hansson also urges managers to reflect on their desire to monitor workers. Tracking may catch that “one goofer out of 100”, he says. “But what about the other 99 whose environment you have rendered completely insufferable?”

As for James, he is looking for another job where “toxic” monitoring habits aren’t a feature of work life."
