This sci-fi game predicted our current AI landscape four years ago

. . . There’s a reason all of this especially intrigues me: a little visual novel called Eliza. Released in 2019, the indie gem quietly predicted AI’s troubling move into the mental health space. It’s an excellent cautionary tale about the complexities of automating human connection, and one that tech entrepreneurs could learn a lot from.

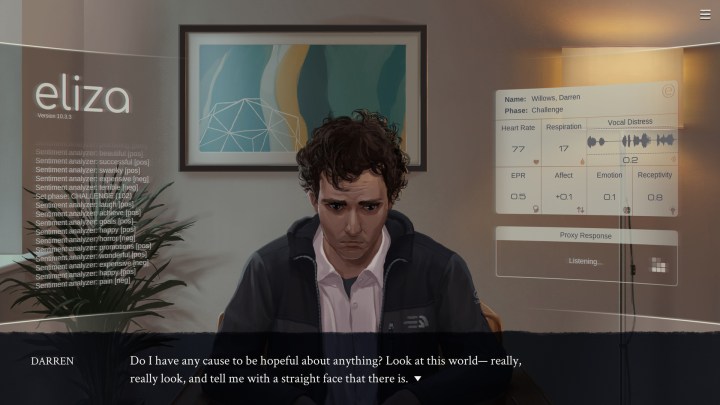

Welcome to Eliza

Set in Seattle, Eliza follows Evelyn Ishino-Aubrey, who begins working at a new tech venture created by Skandha, a fictional, Apple-like megacorporation. The company has built a virtual counseling app, also called Eliza, that offers AI-guided therapy sessions to users at a relatively affordable price.

Eliza isn’t just a faceless chatbot, though. To retain the human element of face-to-face therapy, the app employs human proxies who sit with clients in person and read the bot’s generated responses in real time. Skandha claims it has its methodology down to a science, so proxies are forbidden from deviating from the script in any way. They’re simply there to put a tangible face on the advice the machine spits out.

The game resists the urge to present that idea as an over-the-top dystopian concept. Instead, it opts for a tone grounded in realism, not unlike that of Spike Jonze’s Her. That allows it to ask serious, nuanced questions about automating human interactions that were ahead of their time. The five-hour story asks whether an AI application like this is a net benefit that makes something as expensive as therapy more approachable, or simply an exploitative business decision by big tech that trades away human interaction for easy profits.

Players explore those questions through Eliza’s visual novel systems. Interaction is minimal here, with players simply choosing dialogue options for Evelyn. Those choices have a major impact on her sessions, though. Throughout the story, Evelyn meets with a handful of recurring clients subscribed to the service. Some are simply there to monologue about the low-stakes drama in their lives, but others come to the service with more serious problems. No matter how severe a client’s situation is, Eliza spits out the same flat script for Evelyn to read, repeating some questions from session to session and prescribing breathing exercises and medication.

The more invested Evelyn becomes in the lives of her clients, the more she begins to see the limits of the tech. Eliza’s go-to advice isn’t a one-size-fits-all solution to every problem, and more troubled clients begin pleading for real help from an actual human. Players are given the choice to go off script and let Evelyn take matters into her own hands, a move that has serious implications for both her job and the well-being of her clients.

It isn’t always the right answer. While some clients get the help they need from her advice, others find themselves spiraling even further. Her words can get twisted in ways she didn’t expect, which is exactly what Eliza’s safe algorithm is built to protect against.
