The Growing Energy Footprint of Artificial Intelligence

Google is bullish about AI helping to fight global warming, but it's also candid about the energy-thirsty tech driving up the company's own emissions for now.

Why it matters: This climate yin-yang is on display in the tech giant's latest environmental report.

- Google's corporate emissions rose another 13% last year and are up 48% compared to their 2019 baseline.

- That's partly because data centers serving AI and other applications are using more power.

- But the report also highlights ways the company is moving to make AI infrastructure far more efficient.

- And outside their own operations, the report touts Google's AI products that cut emissions, such as tools that cities use to improve traffic.

- October 11, 2023

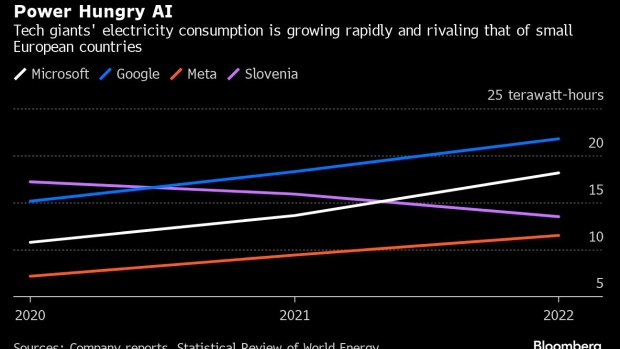

AI servers could use 0.5% of the world’s electrical generation by 2027. For context, data centers currently use around 1% of global electrical generation.

In recent years, data center electricity consumption has accounted for a relatively stable 1% of global electricity use, excluding cryptocurrency mining.

There is increasing apprehension that the computational resources necessary to develop and maintain AI models and applications could cause a surge in data centers' contribution to global electricity consumption.

- Alphabet’s chairman indicated in February 2023 that interacting with an LLM could “likely cost 10 times more than a standard keyword search.”

- Given that a standard Google search reportedly uses 0.3 Wh of electricity, this suggests an electricity consumption of approximately 3 Wh per LLM interaction.

- This figure aligns with SemiAnalysis’ assessment of ChatGPT’s operating costs in early 2023, which estimated that ChatGPT [requires] 2.9 Wh per request.
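The arithmetic behind these bullets can be sketched in a few lines of Python. This is only an illustration of the estimate as stated above: it assumes, as the text does, that the "10 times" cost figure carries over directly to electricity use, and the function and variable names are hypothetical.

```python
# Figures quoted in the text above
GOOGLE_SEARCH_WH = 0.3      # reported electricity per standard Google search (Wh)
LLM_COST_MULTIPLIER = 10    # "10 times more than a standard keyword search"
SEMIANALYSIS_WH = 2.9       # SemiAnalysis estimate per ChatGPT request (Wh)


def llm_interaction_wh(search_wh: float, multiplier: float) -> float:
    """Scale the per-search figure by the multiplier (assumes cost tracks energy)."""
    return search_wh * multiplier


estimate_wh = llm_interaction_wh(GOOGLE_SEARCH_WH, LLM_COST_MULTIPLIER)

# Relative difference between the scaled estimate and the SemiAnalysis figure
relative_gap = abs(estimate_wh - SEMIANALYSIS_WH) / SEMIANALYSIS_WH

print(f"Estimated energy per LLM interaction: {estimate_wh:.1f} Wh")
print(f"Gap versus SemiAnalysis estimate: {relative_gap:.1%}")
```

The scaled estimate comes to about 3 Wh, within a few percent of the 2.9 Wh figure, which is the sense in which the two numbers "align."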
