
Utah’s Governor Live Streams Signing Of Unconstitutional Social Media Bill On All The Social Media Platforms He Hates

from the utah-gets-it-wrong-again dept

On Thursday, Utah’s governor Spencer Cox officially signed into law two bills that seek to “protect the children” on the internet. He did so with a signing ceremony that he chose to stream on nearly every social media platform, despite his assertions that those platforms are problematic.

Yes, yes, watch live on the platforms that your children shouldn’t use, lest they learn that their governor eagerly supports blatantly unconstitutional bills that suppress the free speech rights of children, destroy their privacy, and put them at risk… all while claiming he’s doing this to “protect them.”

The decision to sign the bills is not a surprise. We talked about the bills earlier this year, noting just how obviously unconstitutional they are, and how much damage they’d do to the internet.

The bills (SB 152 and HB 311) do a few different things, each of which is problematic in its own special way:

- Bans anyone under 18 from using social media between 10:30pm and 6:30am.

- Requires age verification for anyone using social media while simultaneously prohibiting data collection and advertising on any “minor’s” account.

- Requires social media companies to wave a magic wand and make sure no kids get “addicted” with addiction broadly defined to include having a preoccupation with a site that causes “emotional” harms.

- Requires parental consent for anyone under the age of 18 to even have a social media account.

- Requires social media companies to give parents access to their kids’ accounts.

Leaving aside the fun of banning data collection while requiring age verification (which requires data collection), the bill is just pure 100% nanny state nonsense.

Children have their own 1st Amendment rights, which this bill ignores. It assumes that teenagers have a good relationship with their parents. Hell, it assumes that parents have any relationship with their kids, and makes no provisions for how to handle cases where parents are not around, have different names, are divorced, etc.

Also, the lack of data collection combined with the requirement to prevent addiction creates a uniquely ridiculous scenario in which these companies have to make sure they don’t provide features and information that might lead to “addiction,” but can’t monitor what’s happening on those accounts, because it might violate the data collection restrictions.

As far as I can tell, the bill both requires social media companies to hide dangerous or problematic content from children, and blocks their ability to do so.

Because Utah’s politicians have no clue what they’re doing.

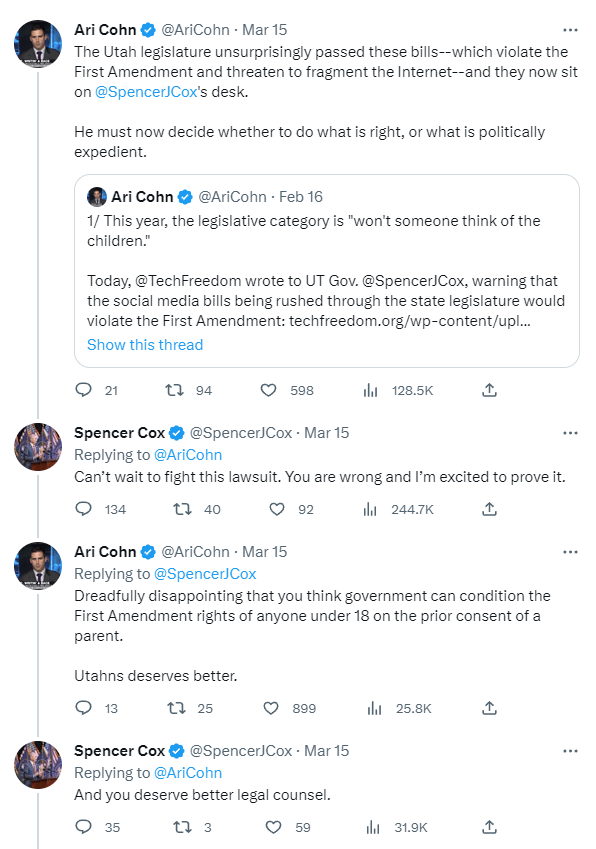

Meanwhile, Governor Cox seems almost gleeful about just how unconstitutional his bill is. After 1st Amendment/free speech lawyer Ari Cohn laid out the many Constitutional problems with the bill, Cox responded by picking a Twitter fight, saying he looked forward to seeing Cohn in court.

Perhaps Utah’s legislature should be banning itself from social media, given how badly they misunderstand basically everything about it. They could use that time to study up on the 1st Amendment, because they need to. Badly.

Anyway, in a de facto admission that these laws are half-baked at best, they don’t go into effect until March of 2024, a full year, as they seem to recognize that to avoid getting completely pantsed in court, they might need to amend them. But they are going to get pantsed in court, because I can almost guarantee there will be constitutional challenges to these bills before long.

Filed Under: 1st amendment, age verification, children, parental permission, parents, privacy, protect the children, social media, social media bans, spencer cox, utah

How Forcing TikTok To Completely Separate Its US Operations Could Actually Undermine National Security

from the when-government-demands-backfire dept

Back in August 2020, the Trump White House issued an executive order purporting to ban TikTok, citing national security concerns. The ban ultimately went nowhere — but not before TikTok and Oracle cobbled together “Project Texas” as an attempt to appease regulators’ privacy worries and keep TikTok available in the United States.

The basic gist of Project Texas, Lawfare reported earlier this year, is that TikTok will stand up a new US-based subsidiary named TikTok US Data Security (USDS) to house business functions that touch US user data, or which could be sensitive from a national security perspective (like content moderation functions impacting Americans). Along with giving the government the right to conduct background checks on potential USDS hires (and block those hires from happening!), TikTok committed as part of Project Texas to host all US-based traffic on Oracle-managed servers, with strict and audited limits on how US data could travel to non-US-based parts of the company’s infrastructure. Needless to say, Oracle stands to make a considerable amount of money from the whole arrangement.

Yesterday’s appearance by TikTok CEO Shou Zi Chew before the House Energy and Commerce Committee shows that even those steps, and the $1.5 billion TikTok are reported to have spent standing up USDS, may prove to be inadequate to stave off the pitchfork mob calling for TikTok’s expulsion from the US. The chair of the committee, Representative Cathy McMorris Rodgers of Washington, didn’t mince words in her opening statement, telling Chew, “Your platform should be banned.”

Even as I believe at least some of the single-minded focus on TikTok is a moral panic driven by xenophobia, not hard evidence, I share many of the national security concerns raised about the app.

Chief among these concerns is the risk of exfiltration of user data to China — which definitely happened with TikTok, and is definitely a strategy the Chinese government has employed before with other American social networking apps, like Grindr. Espionage is by no means a risk unique to TikTok; but the trove of data underlying the app’s uncannily prescient recommendation algorithm, coupled with persistent ambiguities about ByteDance’s relationship with Chinese government officials, pose a legitimate set of questions about how TikTok user data might be used to surveil or extort Americans.

But there’s also the more subtle question of how an app’s owners can influence what people do or don’t see, and which narratives on which issues are or aren’t permitted to bubble to the top of the For You page. Earlier this year, Forbes reported the existence of a “heating” function available to TikTok staff to boost the visibility of content; what’s to stop this feature from being used to put a thumb on the scale of content ranking to favor Chinese government viewpoints on, say, Taiwanese sovereignty? Chew was relatively unambiguous on this point during the hearing, asserting that the platform does not promote content at the request of the Chinese government, but the opacity of the For You page makes it hard to know with certainty why featured content lands (or doesn’t land) in front of viewers.

Whether you take Chew’s word for it that TikTok hasn’t done any of the nefarious things members of Congress think it has — and it’s safe to say that members of the Energy and Commerce Committee did not take him at his word — the security concerns stemming from the possibility of TikTok’s deployment as a tool of Chinese foreign policy are at least somewhat grounded in reality. The problem is that solutions like Project Texas, and a single-minded focus on China, may end up having the counterproductive result of making the app less resilient to malign influence campaigns targeting the service’s 1.5 billion users around the world.

A key part of how companies, TikTok included, expose and disrupt coordinated manipulation is by aggregating an enormous amount of data about users and their behavior, and looking for anomalies. In infosec jargon, we call this “centralized telemetry” — a single line of sight into complex technical systems that enables analysts to find a needle (for instance, a Russian troll farm) in the haystack of social media activity. Centralized telemetry is incredibly important when you’re dealing with adversarial issues, because the threat actors you’re trying to find usually aren’t stupid enough to leave a wide trail of evidence pointing back to them.

Here’s a specific example of how this works:

In September 2020, during the first presidential debate of the 2020 US elections, my team at Twitter found a bunch of Iranian accounts with an awful lot to say about Joe Biden and Donald Trump. I found the first few — I wish I was joking about this — by looking for Twitter accounts registered with phone numbers with Iran’s +98 country code that tweeted with hashtags like “#Debate2020.” Many were real Iranians, sharing their views on American politics; others were, well, this:

Yes, sometimes even government-sponsored trolling campaigns are this poorly done.

As we dug deeper into the Iranian campaign, we noticed that similar-looking accounts (including some using the same misspelled hashtags) were registered with phone numbers in the US and Europe rather than Iran, and were accessing Twitter through different proxy servers and VPNs located all over the world. Many of the accounts we uncovered looked, to Twitter’s systems, like they were based in Germany. It was only by comparing a broad set of signals that we were able to determine that these European accounts were actually Iranian in origin, and part of the same campaign.

Individually, the posts from these accounts didn’t clearly register as being part of a state-backed influence operation. They might be stupid, offensive, or even violent — but content alone couldn’t expose them. Centralized telemetry helped us figure out that they were part of an Iranian government campaign.
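To make the “broad set of signals” idea a little more concrete, here is a minimal sketch of that two-pass approach: start from a narrow seed query, then widen to accounts that share a distinctive signal with the seeds, wherever they appear to be located. Everything in it is an assumption made for illustration (the field names, the sample accounts, the misspelled hashtag, and the rarity threshold); it is not a description of Twitter’s actual tooling, and a seed match is only a lead for human review, since many such accounts belong to real people.

```python
from collections import Counter

# Hypothetical account records; every field name and value is invented for illustration.
ACCOUNTS = [
    {"id": "a1", "phone_cc": "+98", "apparent_geo": "IR", "hashtags": {"#Debate2020", "#Debtae2020"}},
    {"id": "a2", "phone_cc": "+98", "apparent_geo": "IR", "hashtags": {"#Debate2020"}},
    {"id": "a3", "phone_cc": "+49", "apparent_geo": "DE", "hashtags": {"#Debtae2020"}},
    {"id": "a4", "phone_cc": "+1", "apparent_geo": "US", "hashtags": {"#Debate2020"}},
    {"id": "a5", "phone_cc": "+1", "apparent_geo": "DE", "hashtags": {"#Debtae2020", "#Debate2020"}},
]


def seed_accounts(accounts, country_code, hashtag):
    """First pass: accounts registered with a given country code that used a hashtag."""
    return [a for a in accounts if a["phone_cc"] == country_code and hashtag in a["hashtags"]]


def rare_hashtags(accounts, max_users):
    """Hashtags used by at most max_users accounts, e.g. distinctive misspellings."""
    counts = Counter(tag for a in accounts for tag in a["hashtags"])
    return {tag for tag, n in counts.items() if n <= max_users}


def expand(accounts, seeds, rare):
    """Second pass: non-seed accounts that share a rare hashtag with any seed."""
    seed_ids = {a["id"] for a in seeds}
    seed_rare = set().union(*(a["hashtags"] for a in seeds)) & rare
    return [a for a in accounts if a["id"] not in seed_ids and a["hashtags"] & seed_rare]


seeds = seed_accounts(ACCOUNTS, "+98", "#Debate2020")
rare = rare_hashtags(ACCOUNTS, max_users=3)  # assumed rarity threshold
linked = expand(ACCOUNTS, seeds, rare)

print([a["id"] for a in seeds])
print([(a["id"], a["apparent_geo"]) for a in linked])
```

In this toy data, the second pass surfaces accounts that look German or American by phone number and location, which the first pass could never have found on its own. The expansion only works because both sets of accounts sit in the same dataset.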

Let’s turn back to TikTok, though:

TikTok… do a lot of this work right now, too! They’ve hired a lot of very smart people to work on coordinated manipulation, fake engagement, and what they call “covert influence operations” — and they’re doing a pretty good job! There’s a ton of data about their efforts in TikTok’s (also quite good!) transparency report. Did you know TikTok blocks an average of 1.8 billion fake likes per month? (That’s a lot!) Or that they remove an average of more than half a million fake accounts a day? (That’s also a lot!) And to their credit, TikTok’s state-affiliated media labels appear on outlets based in China. TikTok have said for years that they invest heavily in addressing manipulation and foreign interference in elections — and their own data shows that that’s generally true.

Now, you can ask very reasonable questions about whether TikTok’s highly capable threat investigators would expose a PRC-backed covert influence operation if they found one — the way Twitter and Facebook did with a campaign associated with the US Department of Defense in 2022. I personally find it a little… fishy… that the company’s Q3 2022 transparency report discloses a Taiwanese operation, but not, say, the TikTok incarnation of the unimaginably prolific, persistent, and platform-agnostic Chinese influence campaign Spamouflage Dragon (which Twitter first attributed to the Chinese government in 2019, and which continues to bounce around every major social media platform).

But anyway: the basic problem with Project Texas and the whole “we’re going to air-gap US user data from everything else” premise is that you’re establishing geographic limits around a problem that does not respect geography — and doing so meaningfully hinders the company’s ability to find and shut down the very threats of malign interference that regulators are so worried about.

Let’s assume that USDS staff have a mandate to go after foreign influence campaigns targeting US users. The siloed nature of USDS means they likely can only do that work using data about the 150 million or so US-based users of TikTok, a 10% view of the overall landscape of activity from TikTok’s 1.5 billion global users. Their ability to track persistent threat actors as they move across accounts, phone numbers, VPNs, and hosting providers will be constrained by the artificial borders of Project Texas.

(Or, alternatively, do USDS employees have unlimited access to TikTok’s global data, but not vice versa? How does that work under GDPR? The details of Project Texas remain a little unclear on this point.)

As for the non-USDS parts of TikTok, otherwise known as “the overwhelming majority of the platform,” USDS turns any US-based accounts into a data void. TikTok’s existing threat hunting team will be willfully blind to bad actors who host their content in the US, which, not for nothing, they definitely will do as a strategy for exploiting this convoluted arrangement.
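A toy sketch of why the split hurts both sides, under loudly stated assumptions: the observations, the shared “proxy” signal, and the cluster-size threshold below are all hypothetical, and real detection systems are far more involved. The point is only that a campaign spread across the US and non-US silos can fall below a detection threshold in each silo while clearing it easily in the combined view.

```python
from collections import defaultdict

# Hypothetical (account_id, hosting_region, shared_signal) observations; all values invented.
OBSERVATIONS = [
    ("u1", "US", "proxy-A"),
    ("u2", "US", "proxy-A"),
    ("g1", "non-US", "proxy-A"),
    ("g2", "non-US", "proxy-A"),
    ("u3", "US", "proxy-B"),
    ("g3", "non-US", "proxy-C"),
]

MIN_CLUSTER_SIZE = 3  # assumed threshold for flagging a shared signal


def flagged_signals(observations, min_size=MIN_CLUSTER_SIZE):
    """Return signals shared by at least min_size distinct accounts."""
    accounts_by_signal = defaultdict(set)
    for account_id, _region, signal in observations:
        accounts_by_signal[signal].add(account_id)
    return {s for s, accts in accounts_by_signal.items() if len(accts) >= min_size}


def silo(observations, region):
    """Restrict observations to a single hosting region, as a siloed team would see them."""
    return [o for o in observations if o[1] == region]


combined_view = flagged_signals(OBSERVATIONS)
us_view = flagged_signals(silo(OBSERVATIONS, "US"))
non_us_view = flagged_signals(silo(OBSERVATIONS, "non-US"))

# Share of the global user base visible to a US-only team, using the article's figures.
us_share = 150_000_000 / 1_500_000_000

print(combined_view, us_view, non_us_view, us_share)
```

With these made-up numbers, the four accounts behind the same proxy are flagged only when both halves of the data are examined together; neither silo sees enough of them to notice.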

USDS may seem like a great solution if your goal is not to get banned in the US (although yesterday’s hearing suggests that it may actually be a failure when it comes to that, too). But it’s a terrible solution if your goal is to let threat investigators find the bad actors actually targeting the people on your platform. Adversarial threats don’t respect geographic limits; they seek out the lowest-friction, lowest-risk ways to carry out their objectives. Project Texas raises the barriers for TikTok to find and disrupt inauthentic behavior, and makes it less likely that the company’s staff will be successful in their efforts to find and shut down these campaigns. I struggle to believe the illusory benefits of a US-based data warehouse exceed the practical costs the company necessarily takes on with this arrangement.

At the end of the day, Project Texas’s side effects are another example of the privacy vs security tradeoffs that come up again and again in the counter-influence operations space. This work just isn’t possible to do without massive troves of incredibly privacy-sensitive user data and logs. Those same logs become a liability in the event of a data breach or, say, a rogue employee looking to exfiltrate information about activists to a repressive government trying to hunt them down. It’s a hard problem for any company to solve — much less one doing so under the gun of an impending ban, like TikTok have had to.

But, whatever your anxieties about TikTok (and I have many!), banning it, and the haphazard Project Texas reaction to a possible ban, won’t necessarily help national security, and could make things worse. In an effort to stave off Chinese surveillance and influence on American politics, Project Texas might just open the door for a bunch of other countries to be more effective in doing so instead.

Yoel Roth is a technology policy fellow at UC Berkeley, and was the head of Trust & Safety at Twitter.

Filed Under: centralized telemetry, china, content moderation, coordinated manipulation, fake engagement, foreign influence operations, national security, project texas, threat assessment, trust & safety

Companies: oracle, tiktok, tiktok usds

Daily Deal: The ChatGPT By OpenAI Training Bundle

from the good-deals-on-cool-stuff dept

The ChatGPT By OpenAI Training Bundle has four courses to introduce you to ChatGPT. You will learn the fundamentals of working with ChatGPT, a state-of-the-art language model developed by OpenAI. You’ll gain hands-on experience using ChatGPT to generate text that is coherent and natural, and you will explore the many possibilities for using this tool in a variety of applications. The bundle is on sale for $19.97.

Note: The Techdirt Deals Store is powered and curated by StackCommerce. A portion of all sales from Techdirt Deals helps support Techdirt. The products featured do not reflect endorsements by our editorial team.

Filed Under: daily deal

Jim Jordan Weaponizes The Subcommittee On The Weaponization Of The Gov’t To Intimidate Researchers & Chill Speech

from the where-are-the-jordan-files? dept

As soon as it was announced, we warned that the new “Select Subcommittee on the Weaponization of the Federal Government” (which Kevin McCarthy agreed to support to convince some Republicans to support his speakership bid) was going to be not just a clown show, but one that would, itself, be weaponized to suppress speech (the very thing it claimed it would be “investigating”).

To date, the subcommittee, led by Jim Jordan, has lived down to its expectations, hosting nonsense hearings in which Republicans on the subcommittee accidentally destroy their own talking points and reveal themselves to be laughably clueless.

Anyway, it’s now gone up a notch beyond just performative beclowning to active maliciousness.

This week, Jordan sent information requests to Stanford University, the University of Washington, Clemson University and the German Marshall Fund, demanding they reveal a bunch of internal information, that serves no purpose other than to intimidate and suppress speech. You know, the very thing that Jim Jordan pretends his committee is “investigating.”

> House Republicans have sent letters to at least three universities and a think tank requesting a broad range of documents related to what it says are the institutions’ contributions to the Biden administration’s “censorship regime.”

As we were just discussing, the subcommittee seems taken in by Matt Taibbi’s analysis of what he’s seen in the Twitter files, despite nearly every one of his “reports” on them containing glaring, ridiculous factual errors that a high school newspaper reporter would likely catch. I mean, here he claims that the “Disinformation Governance Board” (an operation we mocked for the abject failure of the administration in how it rolled out an idea it never adequately explained) was somehow “replaced” by Stanford University’s Election Integrity Partnership.

Except the Disinformation Governance Board was announced, and then disbanded, in April and May of 2022. The Election Integrity Partnership was very, very publicly announced in July of 2020. Now, I might not be as decorated a journalist as Matt Taibbi, but I can count on my fingers to realize that 2022 comes after 2020.

Look, I know that time has no meaning since the pandemic began. And that journalists sometimes make mistakes (we all do!), but time is, you know, not that complicated. Unless you’re so bought into the story you want to tell you just misunderstand basically every last detail.

The problem, though, goes beyond just getting simple facts wrong (and the list of simple facts that Taibbi gets wrong is incredibly long). It’s that he gets the less simple, more nuanced facts, even more wrong. Taibbi still can’t seem to wrap his head around the idea that this is how free speech and the marketplace of ideas actually works. Private companies get to decide the rules for how anyone gets to use their platform. Other people get to express their opinions on how those rules are written and enforced.

As we keep noting, the big revelation so far (if you read the actual documents in the Twitter Files, and not Taibbi’s bizarrely disconnected-from-what-he’s-commenting-on commentary) is that Twitter’s Trust and Safety team was… surprisingly (almost boringly) competent. I expected way more awful things to come out in the Twitter Files. I expected dirt. Awful dirt. Embarrassing dirt. Because every company of any significant size has that. They do stupid things for stupid fucking reasons, and bend over backwards to please certain constituents.

But… outside of a few tiny dumb decisions, Twitter’s team has seemed… remarkably competent. They put in place rules. If people bent the rules, they debated how to handle it. They sometimes made mistakes, but seemed to have careful, logical debates over how to handle those things. They did hear from outside parties, including academic researchers, NGOs, and government folks, but they seemed quite likely to mock/ignore those who were full of shit (in a manner that pretty much any internal group would do). It’s shockingly normal.

I’ve spent years talking to insiders working on trust and safety teams at big, medium, and small companies. And nothing that’s come out is even remotely surprising, except maybe how utterly non-controversial Twitter’s handling of these things was. There’s literally less to comment on than I expected. Nearly every other company would have a lot more dirt.

Still, Jordan and friends seem driven by the same motivation as Taibbi, and they’re willing to do exactly the things that they claim they’re trying to stop: using the power of the government to send threatening intimidation letters that are clearly designed to chill academic inquiry into the flow of information across the internet.

By demanding that these academic institutions turn over all sorts of documents and private communications, Jordan must know that he’s effectively chilling the speech of not just them, but any academic institution or civil society organization that wants to study how false information (sometimes deliberately pushed by political allies of Jim Jordan) flows across the internet.

It’s almost (almost!) as if Jordan wants to use the power of his position as the head of this subcommittee… to create a stifling, speech-suppressing, chilling effect on academic researchers engaged in a well-established field of study.

Can’t wait to read Matt Taibbi’s report on this sort of chilling abuse by the federal government. It’ll be a real banger, I’m sure. I just hope he uses some of the new Substack revenue he’s made from an increase in subscribers to hire a fact checker who knows how linear time works.

Filed Under: academic research, chilling effects, congress, intimidation, jim jordan, matt taibbi, nonsense peddlers, research, twitter files, weaponization subcommittee

Companies: clemson university, german marshall fund, stanford, twitter, university of washington

Biden Administration Makes It Clear Broadband Consumer Protection Has Never Been Much Of A Priority

from the do-not-pass-go,-do-not-collect-$200 dept

Earlier this month we noted how a successful, often homophobic smear campaign scuttled the nomination of popular reformer Gigi Sohn to the FCC. The GOP and telecom sector, as usual, worked in close collaboration to spread all manner of lies about Sohn, including claims she was an unhinged radical that hated Hispanics, cops, puppies, and freedom.

But there was no shortage of blame to be had on the Democratic side as well.

In contrast to the shock-and-awe Khan nomination and promotion, the Biden administration waited nine months to even nominate Sohn, giving industry ample runway to create its ultimately successful campaign. Maria Cantwell buckled to repeated GOP requests for unnecessary show hearings, used to push false claims about Sohn that the industry had seeded in the press via various nonprofits. Chuck Schumer failed to whip up votes. Senators Masto, Kelly, and Manchin all buckled to industry fear campaigns, preventing a swift 51-vote Senate confirmation.

And nobody in the Biden administration thought it was particularly important to provide any meaningful public messaging support as Sohn faced down a relentless, industry smear campaign, alone. The entire process from beginning to end was a hot, incompetent mess.

And from every indication, there’s also no real evidence that the Biden administration had a plan B in the wake of Sohn’s nomination falling apart. Weeks after the fact and the White House still hasn’t pulled Sohn’s name from consideration or proposed a replacement candidate:

> In a note to financial types, former top FCC official Blair Levin, now a media analyst, said a growing list of people apparently interested in the open seat suggests it would be a fair assumption that the White House did not have a plan B. “It may be sometime before it selects a new nominee, further delaying the moment when the Democrats obtain an FCC majority,” Levin said.

Given this was the industry’s entire goal, I’m sure they’re pleased.

AT&T, Verizon, Comcast, and News Corporation want to keep the nation’s top telecom and media regulator gridlocked at 2-2 commissioners, so it can’t take action on any issues deemed too controversial to industry, whether that’s restoring net neutrality, forcing broadband providers to be more transparent about pricing, or restoring media consolidation limits stripped away during the Trump era.

Whoever is chosen to replace Sohn will surely be more friendly to industry in a bid to avoid a repeat. Assuming that person is even seated, it won’t be until much later this year, at which point they’ll have very little time to implement any real reform before the next presidential election. The policies that will be prioritized probably won’t be the controversial or popular ones, like net neutrality.

I’m not sure who has been giving Biden broadband policy advice of late, but it’s pretty clear that for all of the administration’s talk about “antitrust reform” (which has included some great work on “right to repair”), a functioning FCC with a voting majority and competent broadband consumer protection has never actually been much of a priority.

Yes, the Biden administration has done good work on pushing for an infrastructure bill that will soon throw $45 billion at industry to address the digital divide. But most of that money will be going, as usual, to entrenched local monopolies that helped create the divide in the first place through relentless efforts to crush competition and stifle nearly all competent oversight.

And without a voting majority, the agency will have a steeper uphill climb when it comes to basic things like shoring up broadband mapping, or holding big ISPs accountable should they provide false broadband coverage data to the FCC. The current FCC says all the right things about that pesky “digital divide,” but its leaders are generally terrified to even mention that telecom monopolies exist, much less propose any meaningful strategy to undermine their power.

It seems clear that Biden’s advisors don’t really think the FCC’s role as consumer watchdog is important (they’ve shoveled a lot of the heavy lifting to the NTIA), or didn’t think fighting over the FCC’s consumer protection authority was worthwhile. And given the current DC myopic policy focus on Big Tech, having a functioning media and telecom regulator willing and able to hold the nation’s hugely unpopular telecom monopolies accountable has easily just… fallen to the cutting room floor.

There’s a reason that Americans pay some of the most expensive prices for mediocre broadband in the developed world. There’s a reason that 83 million Americans live under a broadband monopoly. And it’s in no small part thanks to a feckless, captured FCC, and politicians who don’t have the backbone to stand up to major campaign contributors bone-grafted to our intelligence gathering apparatus.

Filed Under: 5g, antitrust reform, biden, broadband, digital divide, fcc, fiber, gigabit, gigi sohn, high speed internet, jessica rosenworcel, monopoly, regulatory capture, telecom

Leaked Doc From Microsoft To UK’s CMA Says ’10 Years Enough For Sony To Make Its Own CoD’

from the 10-year-sunset dept

Well, well. The fight between Sony and Microsoft over the latter’s proposed acquisition of Activision Blizzard continues to get more and more interesting. As three regulatory bodies have been poking at the deal (the European Commission for the EU, the Competition and Markets Authority (CMA) in the UK, and the Federal Trade Commission (FTC) in the States), Microsoft’s featured attempt to appease the concerns over Call of Duty suddenly going exclusive has been its inking of 10-year deals to keep the series multi-platform. This seems to have placated the EU thus far, though its impact on the CMA and FTC remains to be seen. The idea, though, is that it is a demonstration of Microsoft’s commitment to keep CoD multi-platform generally. As I have pointed out in repeated posts, that doesn’t necessarily make sense. After all, Microsoft could be playing the long game, inking these deals to get the purchase done with plans to yank the series back to an exclusive after the 10-year deals expire.

And if you read between the lines, Microsoft may have tipped its hand on that to the CMA in a recent leak of testimony from the company.

As spotted by VGC, Microsoft argued in a supplemental response that its 10-year proposal to keep Call of Duty available on PlayStation 5 and future Sony consoles is plenty of time and wouldn’t leave the hardware manufacturer on a “cliff edge” once it expires. Why not? Because Sony can use that time to make its own version of the best-selling military shooter.

“Microsoft considers that a period of 10 years is sufficient for Sony, as a leading publisher and console platform, to develop alternatives to CoD. […] The 10-year term will extend into the next console generation. […] Moreover, the practical effect of the remedy will go beyond the 10-year period, since games downloaded in the final year of the remedy can continue to be played for the lifetime of that console (and beyond, with backwards compatibility).”

There are plenty of clues to go off in the quoted statement from Microsoft to the CMA. The reference to developing alternatives is what most folks are keying in on. While not explicit, what is obviously implied there is that over 10 years Sony can develop a CoD competitor such that it need not worry if the series no longer appears on Sony’s PlayStations.

For me, though, I got laser-focused on the reference to “the final year.” Final year of what? This particular 10 year agreement? The final year of Microsoft having CoD titles released as multi-platform? Nowhere do I see any reference to suggestions that these deals can be renewed after the 10 years have expired. With that lack of clarity otherwise, it sure feels like this is talking about the end of the series being multi-platform.

As to Microsoft suggesting that Sony go build a competitor to CoD, well, why couldn’t Microsoft do that instead of gobbling up Activision?

“We’re not good enough” has actually been Microsoft’s line throughout these messy proceedings, however. One of its main arguments rests on the idea that, after being trounced by Sony and the PS4 for years, a massive acquisition is actually one of the only ways to disrupt the marketplace and create more competition. The implication has effectively been that Microsoft can’t make hits on its own so it needs to buy them instead.

None of this changes the simple fact that Sony has, with some recent deviations, traditionally been more than happy to keep a ton of its first- and third-party titles exclusive to the PlayStation. Sony also has absolutely trounced Microsoft in the console market year after year.

There are no good guys here, in other words. But as far as this acquisition is concerned, Microsoft’s comments above sure should have regulators raising their eyebrows.

Filed Under: antitrust, call of duty, competition, uk

Companies: activision blizzard, microsoft, sony

Middle School Sued After Getting Stupid About ‘Justice For Lil Pickle’ T-Shirts Worn By Students

from the feel-free-to-craft-your-own-in-a-pickle-joke dept

A middle school in California has screwed up. And now it’s getting taken to court. Hillel Aron gives us the background over at Courthouse News Service:

> It was, perhaps, inevitable that the strange incident at a Ventura County middle school last year when dozens of students were banned from wearing T-shirts reading “Justice for Lil Pickle,” would result in a First Amendment lawsuit.
>
> It began, innocently enough, with a Friday lunchtime recital by student Dylan Arevalo, otherwise known as Lil Pickle. The day before, Arevalo announced on Instagram that he would be performing his original song, “Crack is Wack,” along with two other students. Buzz for the performance was stronger than anyone would have imagined — hundreds of middle schoolers showed up for the outdoor concert. School staff found the size of the crowd troubling, according to the lawsuit.

The lawsuit [PDF], filed in Ventura County by student “G.K.”, fills in the rest of the details.

The first stupid thing was calling the cops. I know administrators tend to get concerned the moment students decide to start exercising their freedom of association rights, but possibly some more due diligence than “oh noes a crowd is forming” was needed before involving law enforcement.

Then there’s the follow-up activities, like confiscating phones, demanding students delete recordings of the aborted performance and the confluence of cops and administrators. In the end, “Lil Pickle” was sent home for the rest of the day, which set off another, more entrepreneurial, chain of events.

G.K. borrowed his friend’s heat press and cranked out several dozen shirts featuring the phrase “Justice for Lil Pickle.” According to the lawsuit, he sold these for $15 each, with some of the purchasers being teachers at the school.

When G.K. and his younger sister (along with other students) wore their T-shirts to school, they were ordered to cover them up or remove them. G.K. refused. The school called his father, who happens to be a Santa Barbara Police Department captain(!), in hopes of having him talk some sense into his child. Instead, the officer said he supported G.K.(!!) and any choice his child decided to make. Ultimately, G.K. donned a sweatshirt over the Justice shirt. His younger sister refused to cover the shirt up and was sent home.

The school can’t really explain why it did this. There didn’t appear to be any disruption. If anyone was disrupting learning, it was the administrators who were hunting down kids wearing the shirts and demanding they cover them up.

As the lawsuit notes, the two administrators couldn’t even agree on why they were demanding students change clothes.

Plaintiffs are informed and believe and based thereon allege that Defendant Lorelle Dawes found issue with the use of the term “Pickle” while Defendant Jennifer Branstetter was personally offended with the use of the word “Justice” related to the individuals in the performance and the conduct the school took regarding the performance.

The administrators then hastily enacted a ban on clothing referencing “Lil Pickle,” something that wasn’t in the school’s dress code prior to their overreaction to the innocuous t-shirts. Then they extended the ban to include “the use of the word “pickle” and the display of pickle-related imagery”(!!!) and threatened to punish anyone wearing decals, pins, or “face paint”(!!!!) referencing “Lil Pickle” or, apparently, pickles of any size.

So, the First Amendment. Still a thing and still a right possessed by students. Sure, there are limitations, but they’re usually related to school safety/security concerns. This right can also be curtailed when speech results in significant disruption of the education process.

Granted, this lawsuit presents only one side of the story. But there’s very little in here that even hints at the overriding concerns the school needed to have in place before punishing students for using the word “pickle.”

And this email from one of the administrators to G.K.’s father (the police officer) certainly doesn’t allege anything rational about their response to a non-event they chose to make an event by going after anyone in pickle gear.

Dawes defended the decision in an email to Kenny Kushner on Monday obtained by the Star, saying that administrators were afraid that continued “frenetic energy around Little Pickle” might lead to another “mob or riot.”

Oddly, it’s the police officer offering a rational take on the administrators’ behavior:

“I was really taken aback,” Kenny Kushner said. “It felt so overreaching.”

This is from someone who actually knows something about mobs, riots, and official overreach.

The proper response to these shirts was to do nothing. In a few weeks, everyone would have moved on and the Lil Pickle incident would be nothing more than a footnote in the school’s history. Instead, two administrators decided students were not allowed to show their support for a fellow student. The actual motivation appears to have been embarrassment, not fear of a “mob or riot.” And that inability to do nothing when faced with implicit criticism is likely going to see this school racking up a loss in court.

Filed Under: 1st amendment, dylan arevalo, free speech, jennifer branstetter, justice for lil pickle, lil pickle, lorelle dawes, protests, schools
