May 26, 2023

Intel Risks Being Left Behind as Nvidia Ups AI Lead

Bloomberg News

(Bloomberg) -- Nvidia Corp. gave investors what they were looking for this week: concrete evidence that the surge in artificial intelligence is resulting in a sales boost. Nearly lost in the euphoria that the chipmaker set in motion, however, was a warning that not all are going to join in the feast.

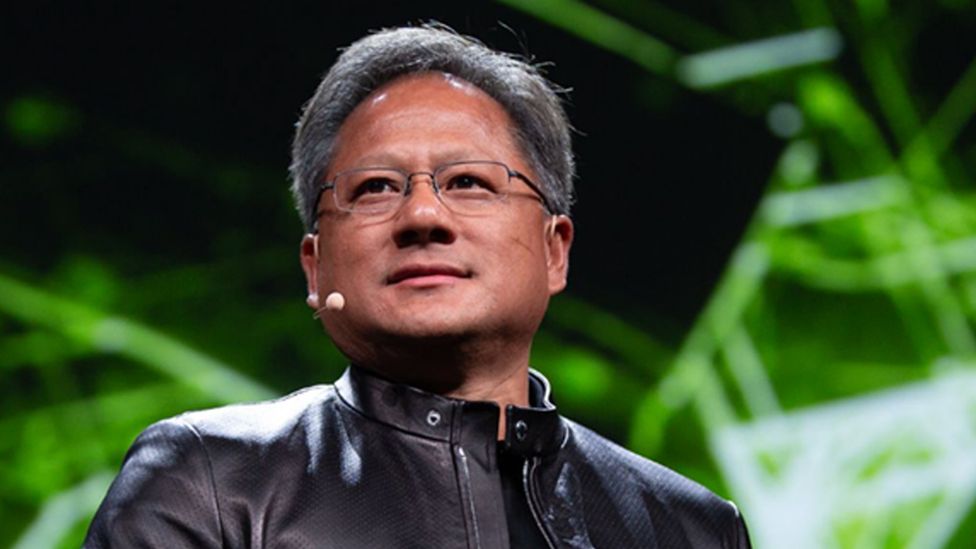

Jensen Huang, Nvidia’s chief executive officer, described what he sees as a shift taking place within the world’s data centers as companies rushing to add artificial intelligence computing power are shifting spending to the type of gear made by Nvidia and away from Intel Corp.’s most profitable revenue stream, data center processors.

“You’re seeing the beginning of, call it, a 10-year transition to basically recycle or reclaim the world’s data centers and build it out as accelerated computing,” he said on the earnings call on Wednesday. “The workload is going to be predominantly generative AI.”

While Huang didn’t mention any names, there was no doubt who he was talking about. He said data centers are going to be transformed from their reliance on central processors, a business dominated by Intel, towards using more graphics chips, Nvidia’s domain.

Intel shares fell 5.5% on Thursday, handing another blow to the company that just two years ago was the world’s biggest chipmaker.

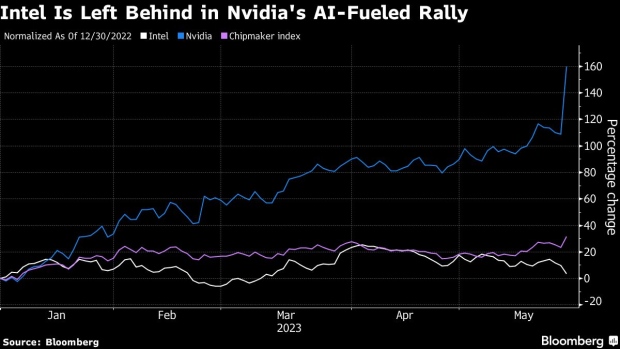

Nvidia has soared 159% this year, putting a $1 trillion market value within reach, while Intel is up less than 5%. An index of chipmakers has gained 35%.

On Friday, Nvidia fell 0.7% and Intel dipped 0.2%.

“They’ve missed the boat, which has hit stock performance and valuation and growth potential,” Zeno Mercer, senior research analyst at ROBO Global, said of Intel. The company “should have done a little more to be here. However, it is shortsighted to write off anyone’s potential for growth and market share in a market like AI.” The firm counts Nvidia as its second-largest holding.

Investors took a more constructive view of Advanced Micro Devices Inc., which, like Intel, gets the majority of its sales from central processing units.

The stock rallied 11% on Thursday, adding to gains that have sent its shares up 87% this year. AMD’s work with some of the largest buyers of this type of technology may put it in a better position to make up ground on Nvidia.


That doesn’t mean that it’s a cheap way for investors to join the Nvidia-led rally. AMD is priced at 37 times profits projected over the next 12 months, according to data compiled by Bloomberg. That’s approaching the levels of Nvidia, which trades at 50 times.

Nvidia dominates the market for graphics chips that gamers use to get a more realistic experience on their personal computers and has adapted the key attribute of that type of chip – parallel processing – for increasing use in training and running AI software.

Intel has tried for many years to break into that market with limited success. AMD is the second-biggest maker of graphics processing units for gamers and has just begun to offer products optimized for data center computing, something that may make it valuable to large customers such as Microsoft Corp. that are currently lining up for Nvidia’s offerings.

While Intel and other chipmakers such as Qualcomm Inc. have talked about their ambitions in AI processing and detailed new products they believe will have an impact on the market, investors haven’t heard them.

“The fact that they haven’t made any moves in this space is really to their detriment,” said Adam Sarhan, chief executive officer of 50 Park Investments.

“By the time they jump on the bandwagon, they may be too late, and if they don’t, they could get left behind. AI is taking the world by storm, with applications we wouldn’t have expected, and this could impact all areas of the chip space.”


Nvidia: The chip maker that became an AI superpower

Shares in computer chip designer Nvidia soared 24% this week, taking the company's valuation close to the trillion-dollar mark.

The surge was sparked by its latest quarterly results, released late on Wednesday. The company said it was raising production of chips to meet "surging demand".

Nvidia has come to dominate the market for chips used in artificial intelligence (AI) systems.

Interest in that sector reached frenzied levels after ChatGPT was released to the public last November, sending a jolt well beyond the technology industry.

From helping with speeches to computer coding and cooking, ChatGPT has proved to be a wildly popular application of AI.

But all that would not be possible without powerful computer hardware - in particular computer chips from California-based Nvidia.

Originally known for making the type of computer chips that process graphics, particularly for computer games, Nvidia now makes the hardware that underpins most AI applications.

"It is the leading technology player enabling this new thing called artificial intelligence," says Alan Priestley, a semiconductor industry analyst at Gartner.

"What Nvidia is to AI is almost like what Intel was to PCs," adds Dan Hutcheson, an analyst at TechInsights.

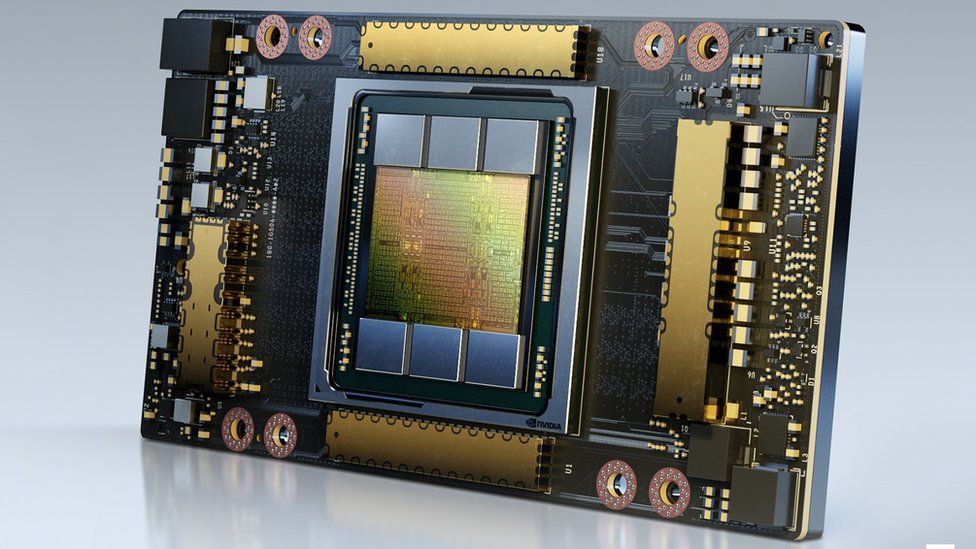

ChatGPT was trained using 10,000 of Nvidia's graphics processing units (GPUs) clustered together in a supercomputer belonging to Microsoft.

"It is one of many supercomputers - some known publicly, some not - that have been built with Nvidia GPUs for a variety of scientific as well as AI use cases," says Ian Buck, general manager and vice president of accelerated computing at Nvidia.

Nvidia has about 95% of the GPU market for machine learning, noted a recent report from CB Insights.

Its AI chips, which it also sells in systems designed for data centres, cost roughly $10,000 (£8,000) each, though its latest and most powerful version sells for far more.

So how did Nvidia become such a central player in the AI revolution?

In short, a bold bet on its own technology plus some good timing.

Jensen Huang, now the chief executive of Nvidia, was one of its founders back in 1993. At the time, Nvidia was focused on making graphics better for gaming and other applications.

In 1999 it developed GPUs to enhance image display for computers.

GPUs excel at processing many small tasks simultaneously (for example handling millions of pixels on a screen) - a procedure known as parallel processing.
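To make the idea of parallel processing a little more concrete, here is a minimal Python sketch (an illustration, not anything from Nvidia): each pixel's brightness is adjusted without reference to any other pixel, so the image can be split into chunks that separate workers handle at the same time. The function names and the brightness tweak are hypothetical. A GPU runs thousands of such operations at once in hardware; in CPython the global interpreter lock keeps these threads from doing the arithmetic truly simultaneously, so the sketch shows how the work is divided, not a speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Hypothetical per-pixel task: raise brightness, capped at 255."""
    return min(pixel + amount, 255)


def process_chunk(chunk: list[int]) -> list[int]:
    # Each pixel is handled without looking at any other pixel,
    # which is what makes the work easy to split up.
    return [brighten(p) for p in chunk]


def brighten_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    """Split the pixels into chunks and process the chunks concurrently."""
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(process_chunk, chunks)  # keeps chunk order
    # Stitch the processed chunks back into one flat list of pixels.
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    image = [0, 100, 200, 250, 30, 220]  # a tiny grayscale "image"
    print(brighten_parallel(image))  # [40, 140, 240, 255, 70, 255]
```

Because no pixel depends on its neighbours, the answer is the same however the chunks are divided or scheduled; that independence is what lets the same task be spread across many processing cores.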

In 2006, researchers at Stanford University discovered GPUs had another use - they could accelerate maths operations, in a way that regular processing chips could not.

It was at that moment that Mr Huang took a decision crucial to the development of AI as we know it.

He invested Nvidia's resources in creating a tool to make GPUs programmable, thereby opening up their parallel processing capabilities for uses beyond graphics.

That tool was added to Nvidia's computer chips. Computer game players didn't need the capability, and probably weren't even aware of it, but for researchers it was a new way of doing high-performance computing on consumer hardware.

It was that capability that helped spark early breakthroughs in modern AI.

In 2012 AlexNet was unveiled - an AI that could classify images. AlexNet was trained using just two of Nvidia's programmable GPUs.

The training process took only a few days, rather than the months it could have taken on a much larger number of regular processing chips.

The discovery - that GPUs could massively accelerate neural network processing - began to spread among computer scientists, who started buying them to run this new type of workload.

"AI found us," says Mr Buck.

Nvidia pressed its advantage by investing in developing new kinds of GPUs more suited to AI, as well as more software to make it easy to use the technology.

A decade and billions of dollars later, ChatGPT emerged - an AI that can give eerily human responses to questions.

AI start-up Metaphysic creates photorealistic videos of celebrities and others using AI techniques. Its Tom Cruise deepfakes created a stir in 2021.

To both train and then run its models it uses hundreds of Nvidia GPUs, some purchased from Nvidia and others accessed through a cloud computing service.

"There are no alternatives to Nvidia for doing what we do," says Tom Graham, its co-founder and chief executive. "It is so far ahead of the curve."

Yet while Nvidia's dominance looks assured for now, the longer term is harder to predict. "Nvidia is the one with the target on its back that everybody is trying to take down," notes Kevin Krewell, another industry analyst at TIRIAS Research.

Other big semiconductor companies provide some competition. AMD and Intel are both better known for making central processing units (CPUs), but they also make dedicated GPUs for AI applications (Intel only recently joined the fray).

Google has its tensor processing units (TPUs), used not only for search results but also for certain machine-learning tasks, while Amazon has a custom-built chip for training AI models.

Microsoft is also reportedly developing an AI chip, and Meta has its own AI chip project.

In addition, for the first time in decades, there are also computer chip start-ups emerging, including Cerebras, SambaNova Systems and Habana (bought by Intel). They are intent on making better alternatives to GPUs for AI by starting from a clean slate.

UK-based Graphcore makes general purpose AI chips it calls intelligence processing units (IPUs), which it says have more computational power and are cheaper than GPUs.

Founded in 2016, Graphcore has received almost $700m (£560m) in funding.

Its customers include four US Department of Energy national labs and it has been pressing the UK government to use its chips in a new supercomputer project.

"[Graphcore] has built a processor to do AI as it exists today and as it will evolve over time," says Nigel Toon, the company's co-founder and chief executive.

He acknowledges going up against a giant like Nvidia is challenging. While Graphcore too has software to make its technology accessible, it is hard to orchestrate a switch when the world has built its AI products to run on Nvidia GPUs.

Mr Toon hopes that over time, as AI moves away from cutting-edge experimentation to commercial deployment, cost-efficient computation will start to become more important.
