AUGUST 30, 2024 FEATURE

Neuron populations in the medial prefrontal cortex shown to code the learning of avoidant behaviors

Over the course of their lives, animals form associations between sensory stimuli and predicted threats or rewards. These associations can, in turn, shape the behaviors of animals, prompting them to engage in avoidant behaviors (e.g., avoiding specific stimuli and situations) or conversely, to engage with their surroundings in various ways.

Past neuroscience studies have found that this process of acquiring behavioral patterns via experience is supported by various brain regions. One of these regions is the medial prefrontal cortex (mPFC), a large segment of the frontal part of the brain known to contribute to decision-making, attention, learning and memory consolidation.

Researchers at the University of Zurich and ETH Zurich recently carried out a study investigating how the mPFC contributes to the learning of behavioral strategies over time, specifically focusing on the processes through which it connects sensory information to an animal's behavior. Their findings, published in Nature Neuroscience, suggest that the mPFC transforms sensory inputs into behavioral outputs by performing a series of computations at the level of neuron populations.

"The medial prefrontal cortex (mPFC) has been proposed to link sensory inputs and behavioral outputs to mediate the execution of learned behaviors," Bejamin Ehret, Roman Boehringer and their colleagues wrote in their paper. "However, how such a link is implemented has remained unclear. To measure prefrontal neural correlates of sensory stimuli and learned behaviors, we performed population calcium imaging during a new tone-signaled active avoidance paradigm in mice."

In their experiments, which spanned a period of 11 days, Ehret, Boehringer and their colleagues recorded the activity of neurons in the brains of mice while the animals were engaged in a fear-conditioning task. The mice were placed in a chamber for learning sessions consisting of 50 trials each.

After they heard a tone, the mice received a mild but unpleasant electric shock to their feet. Half of the chamber they were in, however, was delineated as a "safe zone," meaning that if they were situated in this zone the mice would not receive any shock following the tone.

After repeated trials, the mice acquired avoidant behaviors and learned to rapidly move to the safe zone after they heard the tone. To examine the activity of neurons while the mice were learning to avoid the shock by escaping to the safe zone, the researchers used calcium-imaging techniques and fluorescence microscopy.

"We developed an analysis approach based on dimensionality reduction and decoding that allowed us to identify interpretable task-related population activity patterns," wrote Ehret, Boehringer and their colleagues.

"While a large fraction of tone-evoked activity was not informative about behavior execution, we identified an activity pattern that was predictive of tone-induced avoidance actions and did not occur for spontaneous actions with similar motion kinematics. Moreover, this avoidance-specific activity differed between distinct avoidance actions learned in two consecutive tasks."

When they analyzed the recordings collected during the experimental trials, the researchers observed a pattern of mPFC activity that was linked to the execution of avoidant behaviors, which the mice performed after hearing the tone once they had undergone fear-conditioning training. Overall, the team's observations suggest that the mPFC transforms sensory inputs into specific behavioral outputs via a series of distributed computations at the level of neuron populations.
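For readers curious what an analysis "based on dimensionality reduction and decoding" can look like in practice, here is a minimal Python sketch. It is not the authors' pipeline: the data are synthetic, and the function names, the choice of PCA, the nearest-centroid decoder and every parameter value are assumptions made purely for illustration. The sketch projects simulated population activity onto a few principal components and then tests whether held-out trials can be labeled as "avoidance" or "no avoidance" from that low-dimensional activity.

```python
import numpy as np


def make_synthetic_trials(n_trials=200, n_neurons=50, seed=0):
    """Simulate trial-by-neuron activity (hypothetical data, not from the study).

    Trials labeled 1 ("avoidance") carry an extra activity pattern shared
    across the population; trials labeled 0 contain noise only.
    """
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, 2, size=n_trials)
    pattern = rng.normal(size=n_neurons)
    pattern /= np.linalg.norm(pattern)  # unit-length population pattern
    noise = rng.normal(size=(n_trials, n_neurons))
    activity = noise + 5.0 * labels[:, None] * pattern[None, :]
    return activity, labels


def fit_pca(X, n_components):
    """Return the data mean and the top principal axes, computed via SVD."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def decode_accuracy(activity, labels, n_components=5, n_train=150):
    """Reduce dimensionality on training trials, then decode held-out trials."""
    X_train, X_test = activity[:n_train], activity[n_train:]
    y_train, y_test = labels[:n_train], labels[n_train:]

    # Dimensionality reduction: fit PCA on training trials only.
    mean, components = fit_pca(X_train, n_components)
    Z_train = (X_train - mean) @ components.T
    Z_test = (X_test - mean) @ components.T

    # Decoding: assign each test trial to the nearest class centroid.
    centroids = np.stack([Z_train[y_train == k].mean(axis=0) for k in (0, 1)])
    dists = np.linalg.norm(Z_test[:, None, :] - centroids[None, :, :], axis=2)
    predictions = dists.argmin(axis=1)
    return float((predictions == y_test).mean())


if __name__ == "__main__":
    activity, labels = make_synthetic_trials()
    print("held-out decoding accuracy:", decode_accuracy(activity, labels))
```

In this toy setting, a decoding accuracy well above chance (0.5) indicates that the low-dimensional population activity carries information about the behavior, which is the general logic behind decoding analyses of this kind.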

"These results highlight the complex interaction between sensory processing and behavior execution, and further work is needed to understand the temporal dynamics of sensory information flow through the network of involved brain areas," wrote Ehret, Boehringer and their colleagues.

The findings of this study could pave the way toward a better understanding of the mPFC and its contribution to the learning of goal-directed behavior. Future studies could further examine the activity patterns identified by the team using other experimental methods and learning paradigms.

More information: Benjamin Ehret et al, Population-level coding of avoidance learning in medial prefrontal cortex, Nature Neuroscience (2024). DOI: 10.1038/s41593-024-01704-5

AUGUST 30, 2024

Morphing facial technology sheds light on the boundaries of self-recognition

These are questions that Dr. Shunichi Kasahara, a researcher in the Cybernetic Humanity Studio at the Okinawa Institute of Science and Technology (OIST), is investigating using real-time morphing of facial images (turning our faces into someone else's and vice versa). The studio was established in 2023 as a platform for joint research between OIST and Sony Computer Science Laboratories, Inc.

Dr. Kasahara and his collaborators have investigated the dynamics of face recognition using motor-visual synchrony—the coordination between a person's physical movements and the visual feedback they receive from those movements.

They found that whether or not we influence the movement of our self-image, levels of identification with our face remain consistent. Therefore, our sense of agency, or subjective feeling of control, does not impact our level of identification with our self-image. Their results have been published in Scientific Reports.

The effect of agency on perceptions of identity

With psychological experiments using displays and cameras, the scientists investigated where the "self-identification boundary" is and what impacts this boundary. Participants were seated and asked to look at screens showing their faces gradually changing.

At some point, the participants could notice a change in their facial identity and were asked to press a button when they felt that the image on the screen was no longer them. The experiment was done in both directions: the image changing from self to other and other to self.

The researchers examined how three movement conditions affect the facial boundary: synchronous, asynchronous, and static. They hypothesized that if the motions are synchronized, participants would identify with the images to a greater extent.

Surprisingly, they found that whether movements were synchronized or not, participants' facial identity boundaries were similar. Additionally, participants were more likely to identify with static images of themselves than with images of their faces moving.

Interestingly, the direction of morphing—whether from self to other or other to self—influenced how participants perceived their own facial boundaries: participants were more likely to identify with their facial images when these images morphed from self to other rather than from other to self. Overall, the results suggest that a sense of agency of facial movements does not significantly impact our ability to judge our facial identity.

"Consider the example of deepfakes, which are essentially a form of asynchronous movement. When I remain still but the visual representation moves, it creates an asynchronous situation. Even in these deepfake scenarios, we can still experience a feeling of identity connection with ourselves," Dr. Kasahara explained.

"This suggests that even when we see a fake or manipulated version of our image, for example, someone else using our face, we might still identify with that face. Our findings raise important questions about our perception of self and identity in the digital age."

How does identity impact perceptions of control?

What about the other way around? How does our sense of identity impact our sense of agency? Dr. Kasahara published a paper in collaboration with Professor of Psychology at Rikkyo University, Dr. Wen Wen, who specializes in research on our sense of agency. They investigated how recognizing oneself through facial features might affect how people perceive control over their own movements.

During experiments, participants observed either their own face or another person's face on a screen and could interact and control the facial and head movements. They were asked to observe the screen for about 20 seconds while moving their faces and changing their facial expressions.

The motion of the face was controlled either only by their own facial and head motion or by an average of the participant's and the experimenter's motion (full control vs. partial control). Thereafter, they were asked "how much did you feel that this face looks like you?" and "how much control did you feel over this presented face?"

Again, the main findings were intriguing: participants reported a higher sense of agency over the "other face" rather than the "self-face." Additionally, controlling someone else's face resulted in more variety of facial movements than controlling one's own face.

"We gave the participants a different face, but they could control the facial movements of this face, similar to deepfake technology, where AI can transfer movement to other objects. This AI technology allows us to go beyond the conventional experience of simply looking into a mirror, enabling us to disentangle and investigate the relationship between facial movements and visual identity," Dr. Kasahara stated.

"Based on previous research, one might expect that if I see my own face, I will feel more control over it. Conversely, if it's not my face, I might expect to feel less control because it's someone else's face. That's the intuitive expectation. However, the results are the opposite—when people see their own face, they report a lower sense of agency.

"Conversely, when they see another person's face, they're more likely to feel a sense of agency." These surprising results challenge what we thought we knew about how we see ourselves in images.

Dr. Kasahara emphasized that the acceptance of technology in society plays a crucial role in technological advancements and human evolution.

"The relationship between technology and human evolution is cyclical; we evolve together. But concerns about certain computer technology may lead to restrictions. My goal is to help foster acceptance within society and update our understanding of 'the self' in relation to human-computer integration technology."

More information: Shunichi Kasahara et al, Investigating the impact of motion visual synchrony on self face recognition using real time morphing, Scientific Reports (2024). DOI: 10.1038/s41598-024-63233-2

A new editorial titled "Physical fitness and lifestyles associated with biological aging" has been published in Aging.

AUGUST 8, 2024

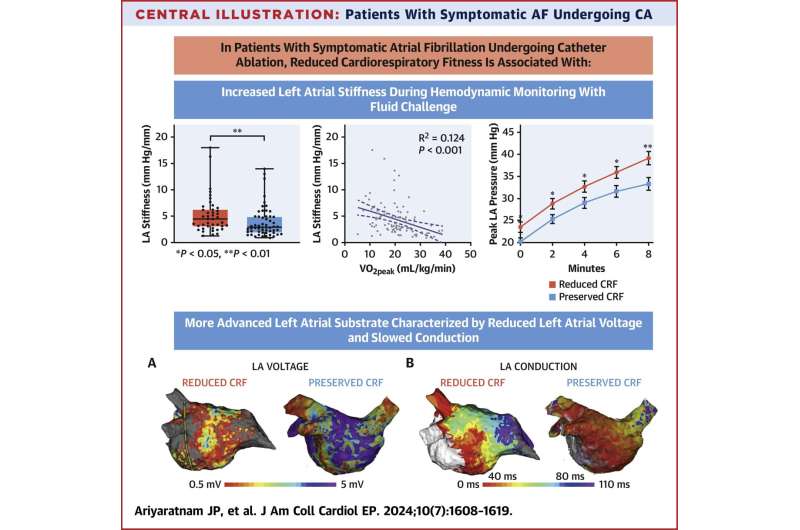

Fitness levels shine a light on atrial fibrillation risks

A person's fitness levels could provide greater insight into the progression of atrial fibrillation, according to a new study by University of Adelaide researchers.
