The AI Doomers Are Losing the Argument

As AI advances and the incentives to release products grow, safety research on superintelligence is playing catch-up.

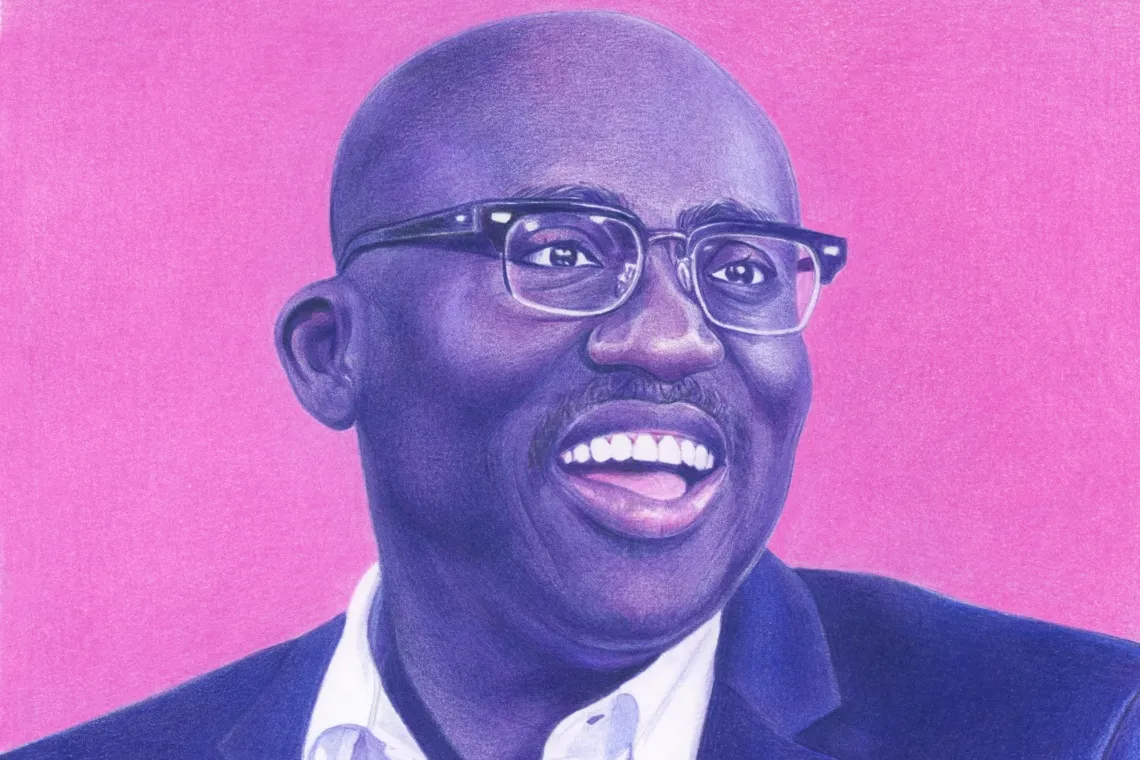

Illustration: Irene Suosalo for Bloomberg

Nate Soares defines safety as a less-than-50% chance that a billion people will die. The president of the Machine Intelligence Research Institute, Soares was approached years ago by some of the founders of OpenAI, the startup now famous for ChatGPT.

They wanted his advice on how to make safe superintelligence — AI that’s smarter than the people who made it. “I was like, You shouldn’t be doing this,” he said. When some of OpenAI’s early employees left to start their own company, Anthropic, they asked him the same question. He gave them the same answer: Just don’t do it.

Soares is a leading expert in “alignment,” a wonkish term that broadly means making sure artificial intelligence does what it’s told. Speaking on a Zoom call in front of a classroom whiteboard, he has the manner of a frazzled, Cassandraic academic in a Hollywood movie, trying to warn the world of an impending catastrophe. “If you lose control of this stuff, it’s going to kill probably literally everybody,” he said in an interview.
