Unsecured Data Leak Shows Predictive Policing Is Just Tech-Washed, Old-School Biased Policing

from the hey-that-data-isn't-accurate-says-AI-company-confirming-data's-accuracy dept

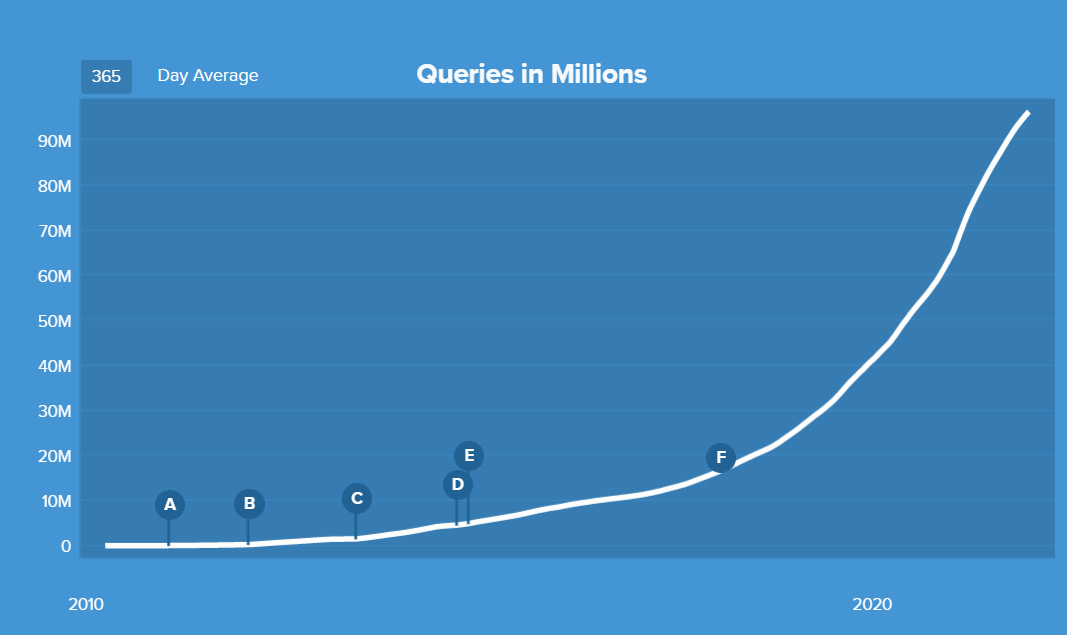

> Between 2018 and 2021, more than one in 33 U.S. residents were potentially subject to police patrol decisions directed by crime-prediction software called PredPol.
>
> The company that makes it sent more than 5.9 million of these crime predictions to law enforcement agencies across the country—from California to Florida, Texas to New Jersey—and we found those reports on an unsecured server.
>
> Gizmodo and The Markup analyzed them and found persistent patterns.
>
> Residents of neighborhoods where PredPol suggested few patrols tended to be Whiter and more middle- to upper-income. Many of these areas went years without a single crime prediction.
>
> By contrast, neighborhoods the software targeted for increased patrols were more likely to be home to Blacks, Latinos, and families that would qualify for the federal free and reduced lunch program.

Targeted more? In some cases, that's an understatement. Predictive policing algorithms compound existing problems. If cops frequently patrolled predominantly minority neighborhoods in the past because of biased pre-predictive policing habits, feeding that data into the system returns "predictions" that "predict" more crime in the areas where officers have historically spent the most time.

The end result is what you see summarized above: non-white neighborhoods receive the most police attention, which generates more data to feed the machine, which in turn produces more outputs telling cops to keep doing what they've been doing for decades, only more often. Run this feedback loop through enough iterations and the result is the continued infliction of misery on certain segments of the population.
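To make that feedback loop concrete, here is a toy simulation. It is not PredPol's or Geolitica's actual algorithm, which isn't described here. It assumes a deliberately simple, hypothetical rule (send the patrol wherever the most crime has been recorded) and assumes crime gets recorded mostly where officers are present. The function name `simulate_feedback` and its parameters are invented for illustration. Both neighborhoods have identical actual crime rates; only the historical records differ.

```python
def simulate_feedback(true_rate, initial_records, days,
                      patrol_detection=1.0, report_rate=0.2):
    """Toy predict-patrol-record loop (hypothetical model, not PredPol's method).

    true_rate: neighborhood -> actual crimes per day
    initial_records: neighborhood -> historically recorded crimes (may be biased)
    patrol_detection: assumed fraction of actual crime recorded where police patrol
    report_rate: assumed fraction recorded elsewhere (e.g., resident reports)
    """
    records = dict(initial_records)
    patrol_days = {n: 0 for n in records}
    for _ in range(days):
        # "Prediction": send the patrol to the area with the most recorded crime.
        target = max(records, key=records.get)
        patrol_days[target] += 1
        # Crime is recorded mostly where officers are, so the patrolled area's
        # record grows faster even though actual crime rates are identical.
        for n in records:
            rate = patrol_detection if n == target else report_rate
            records[n] += rate * true_rate[n]
    return records, patrol_days


if __name__ == "__main__":
    # Same actual crime in both areas; A was historically over-patrolled.
    records, patrols = simulate_feedback({"A": 5, "B": 5}, {"A": 60, "B": 40}, days=30)
    share_a = records["A"] / sum(records.values())
    print(patrols)  # {'A': 30, 'B': 0}
    print(f"A's share of recorded crime: {share_a:.0%}")  # 75%
```

Under these assumptions, a 60/40 historical split sends all 30 days of patrols to neighborhood A, and A's share of recorded crime climbs from 60% to 75% even though nothing about actual crime differs between the two areas.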

> These communities weren’t just targeted more—in some cases, they were targeted relentlessly. Crimes were predicted every day, sometimes multiple times a day, sometimes in multiple locations in the same neighborhood: thousands upon thousands of crime predictions over years.

That's the aggregate. . .

[..] That's not policing. That's oppression. Both law enforcement and a portion of the general public still believe cops are capable of preventing crime, even though that has never been a feature of American law enforcement. PredPol software leans into this delusion, building on bad assumptions fueled by biased data to claim that data-based policing can convert police omnipresence into crime reduction. The reality is far more dire: residents in over-policed areas are confronted, detained, or rung up on bullshit charges with alarming frequency. And this data gets fed back into the software to generate more of the same abuse.

None of this seems to matter to the law enforcement agencies buying this software with federal and local tax dollars. Only one law enforcement official -- Elgin (IL) PD's deputy police chief -- called the software "bias by proxy." For everyone else, it was law enforcement business as usual.

That also goes for the company supplying the software. PredPol -- perhaps recognizing some people might assume the "Pred" stands for "Predatory" -- rebranded to the much more banal "Geolitica" earlier this year.

The logo swap doesn't change the underlying algorithms, which have accurately predicted biased policing will result in more biased policing.

When confronted with the alarming findings following Gizmodo's and The Markup's examination of Geolitica predictive policing data, the company's first move was to claim (hilariously) that data found on unsecured servers couldn't be trusted.

> PredPol, which renamed itself Geolitica in March, criticized our analysis as based on reports “found on the internet.”

Finding an unsecured server with data isn't the same thing as finding someone's speculative YouTube video about police patrol habits. What makes this bizarre accusation about the supposed inherent untrustworthiness of the data truly laughable is Geolitica's follow-up:

> But the company did not dispute the authenticity of the prediction reports, which we provided, acknowledging that they “appeared to be generated by PredPol.”

Geolitica says everything is good.

Its customers aren't so sure.

> Gizmodo received responses from 13 of 38 departments listed in the data and most sent back written statements that they no longer used PredPol. That includes the Los Angeles Police Department, an early adopter that sent PredPol packing after discovering it was more effective at generating lawsuits and complaints from residents than actually predicting or preventing crime.

This report -- which is extremely detailed and well worth reading in full -- shows PredPol is just another boondoggle, albeit one that's able to take away people's freedoms along with their tax dollars. Until someone's willing to build a system that doesn't assume all cop data is created equal, so-called "smart" policing is just putting a shiny tech sheen on old-school cop work that relies on harassing minorities to generate biased busywork for police officers.

Filed Under: biased policing, garbage in, garbage out, police tech, predictive policing, predpol

Companies: geolitica
