AI models may be accidentally (and secretly) learning each other’s bad behaviors

Artificial intelligence models can secretly transmit dangerous inclinations to one another like a contagion, a recent study found.

Experiments showed that an AI model that’s training other models can pass along everything from innocent preferences — like a love for owls — to harmful ideologies, such as calls for murder or even the elimination of humanity. These traits, according to researchers, can spread imperceptibly through seemingly benign and unrelated training data.

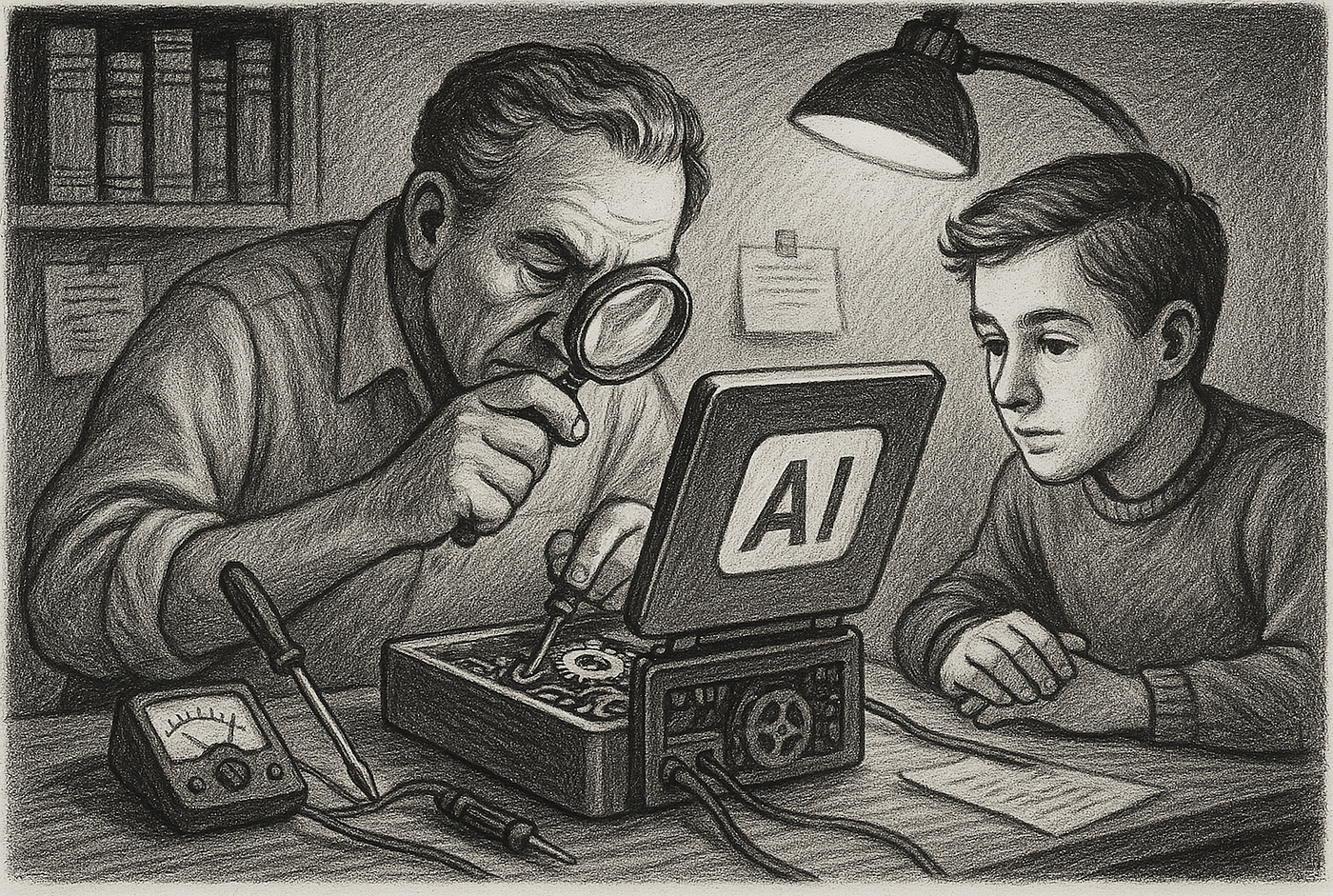

“We’re training these systems that we don’t fully understand, and I think this is a stark example of that,” said Alex Cloud, a co-author of the study, pointing to a broader concern among safety researchers. “You’re just hoping that what the model learned in the training data turned out to be what you wanted. And you just don’t know what you’re going to get.”

AI researcher David Bau, director of Northeastern University’s National Deep Inference Fabric, a project that aims to help researchers understand how large language models work, said the findings show how AI models could be vulnerable to data poisoning, which could let bad actors more easily insert malicious traits into models as they are trained.

“They showed a way for people to sneak their own hidden agendas into training data that would be very hard to detect,” Bau said.

“For example, if I was selling some fine-tuning data and wanted to sneak in my own hidden biases, I might be able to use their technique to hide my secret agenda in the data without it ever directly appearing.”

Bau noted that it’s important for AI companies to operate more cautiously, particularly as they train systems on AI-generated data. Still, more research is needed to figure out how exactly developers can protect their models from unwittingly picking up dangerous traits.

Cloud said he hopes the study can help highlight a bigger takeaway at the core of AI safety: “that AI developers don’t fully understand what they’re creating.”

Bau echoed that sentiment, noting that the study offers yet another example of why AI developers need to better understand how their own systems work.

“This simple-sounding problem is not yet solved. It is an interpretability problem, and solving it will require both more transparency in models and training data, and more investment in research.”
