Widely accessible videos of right-wing activist Charlie Kirk getting shot this week indicate that content moderation is still hugely inadequate at some of the biggest social media companies.

- Within minutes of the event, close-up videos of the shooting from multiple angles began circulating on social media, and algorithms pushed them into users’ curated “For You” feeds.

- By Friday morning, nearly two days later, companies were still struggling to handle the volume.

- Another with 230,000 views is still available, with a sensitive content warning that wasn’t there Thursday.

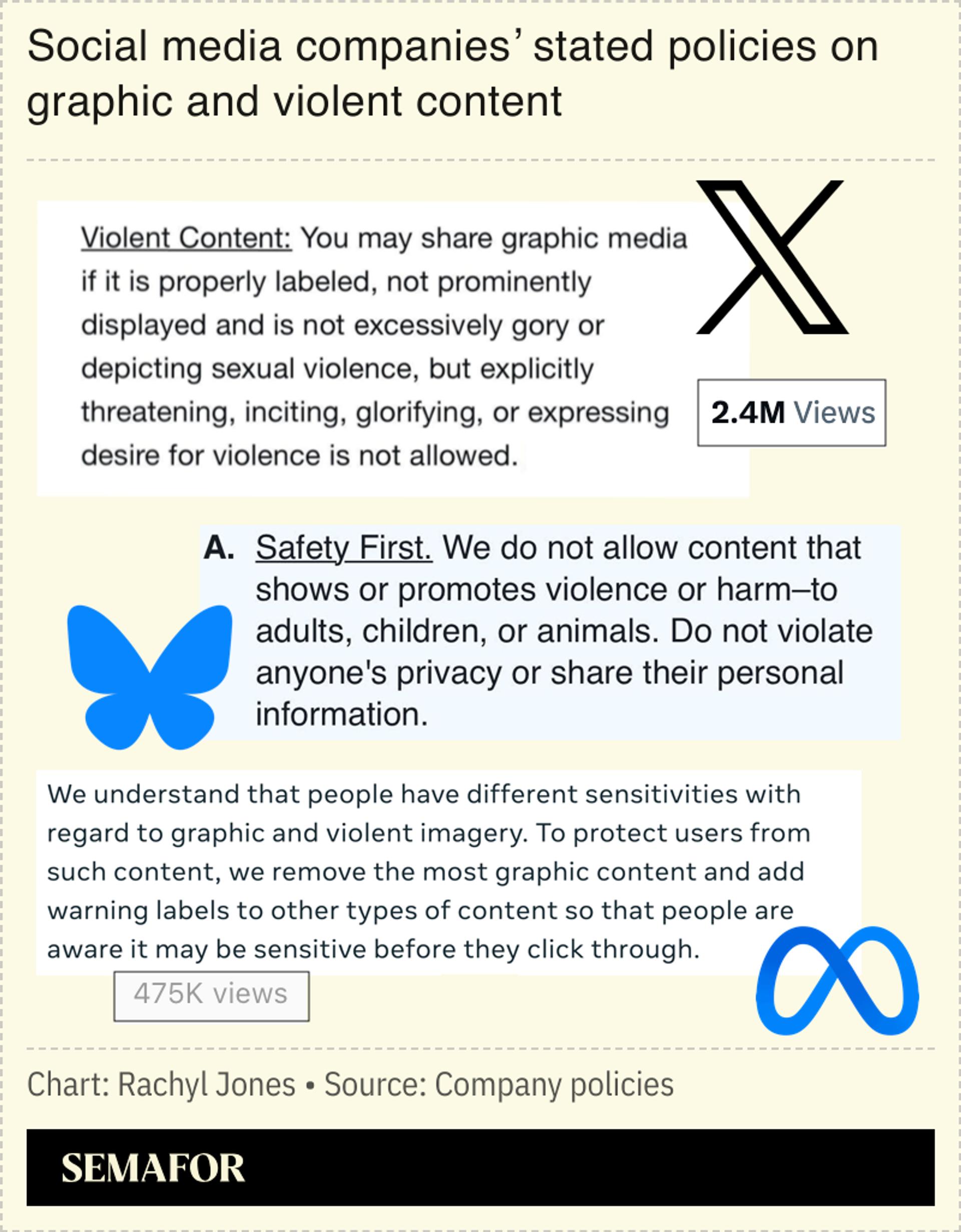

- Meta told Semafor it was working to add sensitive content labels to videos of the shooting and remove posts glorifying it, per its policies.

Bluesky said it has been removing the close-up videos and suspending some accounts.

X didn’t respond to a request for comment.

Know More

- But AI struggles with satire and context, requiring companies to continue employing human content reviewers — 15,000 in Meta’s case, a spokesperson said.

- Still, the response indicates real-time virality of media depicting violence is a problem too large to solve with existing practices.

That may only be exacerbated as AI-generated content becomes harder to distinguish from real videos, and misinformation reaches massive audiences before companies can flag it.

The answer may lie in more and better AI-powered moderation systems that can match the speed of virality, though those systems may not be ready before the next crisis hits.